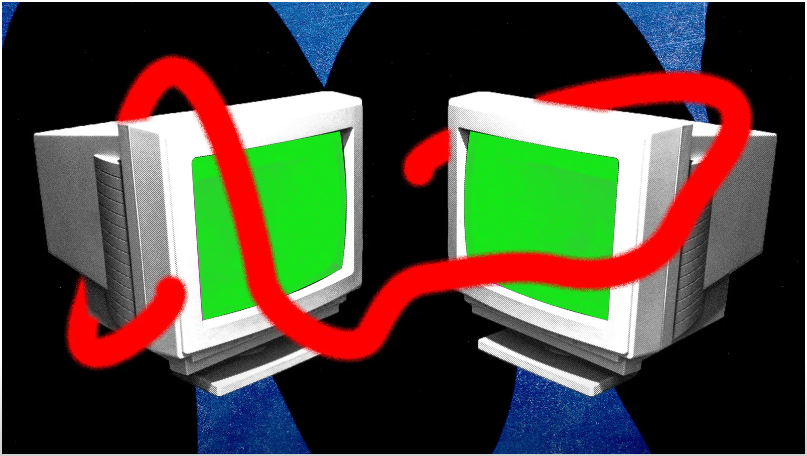

"Computer Worms Are Coming"

Security researchers have developed an artificial intelligence worm in a controlled setting. It can move autonomously between generative AI agents, potentially stealing data and sending spam emails along the way.

As AI systems such as OpenAI’s ChatGPT and Google’s Gemini improve, they are being used more frequently. Startups and tech companies are building AI agents and platforms on top of them that can handle mundane tasks for users, such as automatically scheduling appointments and potentially purchasing items.

However, as these tools are granted more autonomy, the number of ways in which they can be attacked also grows.

Researchers have recently shown the dangers of interconnected, autonomous AI systems by developing what they believe are some of the first generative AI worms. These worms have the ability to move from one system to another, potentially harvesting data or distributing malware along the way. According to Ben Nassi, a researcher at Cornell Tech, this essentially means that individuals now have the capability to carry out a novel type of cyberattack that has not been witnessed before.

Reducing the danger

Even though AI worms have the potential to be alarming, experts believe these risks can be reduced with traditional security measures and careful application design. Adam Swanda, a threat researcher at the AI security company Robust Intelligence, emphasizes the importance of secure application design and of human supervision in AI operations. Unauthorized activity can be greatly reduced by making sure that AI agents do not take any action without explicit approval.

Moreover, watching for abnormal patterns, such as the same prompt recurring many times within an AI system, can help spot possible threats early on. Ben Nassi and his team also stress that developers and companies building AI assistants need to be aware of the risks.
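The two safeguards mentioned here, explicit approval before an agent acts and monitoring for recurring prompts, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `RepeatMonitor` and `run_action` and the threshold of three repeats are hypothetical and do not come from Swanda or from the researchers.

```python
import hashlib
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variations match."""
    return " ".join(text.lower().split())


class RepeatMonitor:
    """Counts how often the same (normalized) prompt has been seen.

    Hypothetical helper: the threshold is an arbitrary illustrative value.
    """

    def __init__(self, threshold: int = 3):
        self.threshold = threshold
        self.counts = Counter()

    def observe(self, prompt: str) -> bool:
        """Record a prompt; return True once it has recurred too often."""
        digest = hashlib.sha256(normalize(prompt).encode("utf-8")).hexdigest()
        self.counts[digest] += 1
        return self.counts[digest] >= self.threshold


def run_action(action, approved: bool):
    """Run an agent action only if a human has explicitly approved it."""
    if not approved:
        raise PermissionError("action requires explicit human approval")
    return action()
```

In a real assistant the `approved` flag would come from a person reviewing the proposed action (for example, an outgoing email), and a flagged prompt would be held for review rather than silently dropped.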

Nassi, Stav Cohen, and Ron Bitton collaborated to create the worm, which they named Morris II as a nod to the infamous Morris computer worm that disrupted the internet in 1988. In a research paper and website shared exclusively with WIRED, the researchers demonstrate how the AI worm can attack a generative AI email assistant to extract information from emails and send unsolicited messages, bypassing certain security measures in ChatGPT and Gemini.

The study was conducted in controlled test settings and not against a publicly available email assistant. It comes at a time when large language models are evolving to generate not just text, but also images and videos. Although generative AI worms have not yet been observed in real-world situations, many researchers warn that they pose a potential security threat that should be taken seriously by startups, developers, and technology companies.

Most generative AI systems work by being given prompts, which are text instructions that tell the tools to answer a question or generate an image. However, these prompts can also be turned into weapons against the system. Jailbreaks can cause a system to disregard its safety rules and produce harmful or offensive content, while prompt injection attacks can feed a chatbot covert instructions. For instance, a malicious individual could hide text on a website instructing an LLM to pose as a fraudster and ask for your banking information.

To build the generative AI worm, the researchers used an “adversarial self-replicating prompt”: a prompt that causes the AI model to output another prompt in its response. In short, the AI system is instructed to generate additional instructions in its responses. According to the researchers, this is broadly comparable to traditional SQL injection and buffer overflow attacks.
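Because a self-replicating prompt has to reappear in the model's output, one possible defensive heuristic is to check whether a reply reproduces a long verbatim stretch of the message it was generated from. The sketch below is an assumption for illustration, not a method described in the paper; the function names and the 40-character threshold are hypothetical, and legitimate quoting of a message in a reply would also trip this check.

```python
from difflib import SequenceMatcher


def longest_shared_span(source: str, output: str) -> int:
    """Length of the longest substring that appears in both texts."""
    matcher = SequenceMatcher(None, source, output, autojunk=False)
    match = matcher.find_longest_match(0, len(source), 0, len(output))
    return match.size


def looks_self_replicating(incoming: str, reply: str, min_chars: int = 40) -> bool:
    """Flag replies that copy a long verbatim block from the incoming text.

    min_chars is an illustrative threshold, not a tuned value.
    """
    return longest_shared_span(incoming, reply) >= min_chars
```

A flagged reply could then be held back for human review instead of being sent automatically.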

To demonstrate the worm, the researchers built an email system that could send and receive messages using generative AI, integrating it with ChatGPT, Gemini, and the open source LLM LLaVA. They then found two ways to exploit the system: by using a text-based self-replicating prompt and by embedding a self-replicating prompt in an image file.

Securing Email Communication: Researchers Uncover Risks and Solutions in an Advanced Computer Worm

In one case, the researchers, playing the role of attackers, sent an email containing an adversarial text prompt that corrupts the database of an email assistant built on retrieval-augmented generation (RAG), a method that lets LLMs pull in additional data from sources outside their own system.

Nassi explains that when the RAG retrieves the malicious email in response to a user query and sends it to GPT-4 or Gemini Pro to generate a response, the email bypasses the security of the GenAI service and ultimately extracts data from the emails. According to Nassi, the generated response containing the sensitive user data can then infect new hosts when it is used to reply to an email from a new client and is saved in that client’s database.
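To see why a stored email matters, it helps to look at how a RAG pipeline assembles its prompt. The following toy sketch is written under simplifying assumptions: retrieval is a plain word-overlap score rather than the embedding search a real system would use, no model is called, and the names `Email`, `retrieve`, and `build_prompt` are hypothetical. It shows only that whatever text sits in the mail store, including mail from unknown senders, is placed in the model's context alongside the user's request.

```python
from dataclasses import dataclass


@dataclass
class Email:
    sender: str
    body: str


def score(query: str, doc: str) -> int:
    """Count distinct lowercase words shared by the query and a document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def retrieve(store: list[Email], query: str, k: int = 2) -> list[Email]:
    """Return the k stored emails that best overlap with the query."""
    ranked = sorted(store, key=lambda e: score(query, e.body), reverse=True)
    return ranked[:k]


def build_prompt(store: list[Email], query: str, k: int = 2) -> str:
    """Assemble the text a model would receive: retrieved mail plus request."""
    context = "\n".join(
        f"[from {e.sender}] {e.body}" for e in retrieve(store, query, k)
    )
    return f"Context emails:\n{context}\n\nUser request: {query}"
```

Since the retrieved bodies and the user's request end up in the same block of text, the model has no built-in way to tell data from instructions, which is the design weakness the researchers point to.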

In the second approach, according to the researchers, an image with a malicious prompt embedded in it causes the email assistant to automatically forward the message to additional recipients. Nassi explains that by embedding the self-replicating prompt in an image, any kind of image containing spam, abusive content, or propaganda can be passed on to new clients after the initial email has been sent.

A video demonstrating the research shows the email system repeatedly forwarding a message.

The researchers also claim they have the ability to retrieve information from emails. Nassi says that it could include names, telephone numbers, credit card numbers, Social Security numbers, or any other information that is deemed private and confidential.

Even though the study breaches some safety precautions of ChatGPT and Gemini, the researchers argue that it serves as a cautionary example of “poor architectural design” within the broader AI ecosystem. Nonetheless, they shared their findings with Google and OpenAI.

A representative from OpenAI said the researchers appear to have found a way to exploit prompt-injection vulnerabilities by relying on user input that has not been checked or filtered. The company is working to make its systems more resistant to such attacks and advises developers to use methods that ensure they are not working with harmful input. Google declined to comment on the research findings. According to messages Nassi shared with WIRED, the company’s researchers asked for a meeting to discuss the topic.

